Image copyright

Getty Images

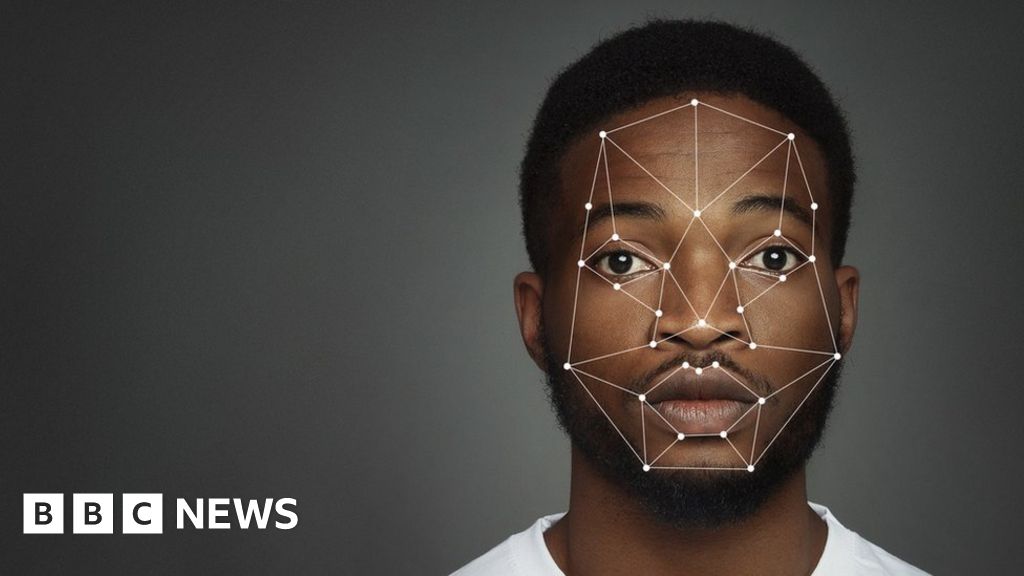

A US government study suggested facial recognition algorithms were less accurate at identifying African-American faces

Tech giant IBM is to stop offering facial recognition software for “mass surveillance or racial profiling”.

The announcement comes as the US faces calls for police reform following the killing of a black man, George Floyd.

In a letter to the US Congress, IBM said AI systems used in law enforcement needed testing “for bias”.

One campaigner said it was a “cynical” move from a firm that has been instrumental in developing technology for the police.

In his letter to Congress, IBM chief executive Arvind Krishna said the “fight against racism is as urgent as ever”, setting out three areas where the firm wanted to work with Congress: police reform, responsible use of technology, and broadening skills and educational opportunities.

“IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms,” he wrote.

“We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

Rather than relying on potentially biased facial recognition, the firm urged Congress to use technology that could bring “greater transparency”, such as body cameras on police officers and data analytics.

Data analytics is more integral to IBM’s business than facial recognition products. It has also worked to develop technology for predictive policing, which has also been criticised for potential bias.

‘Let’s not be fooled’

Privacy International’s Eva Blum-Dumontet said the firm had coined the term “smart city”.

“All around the world, they pushed a model of urbanisation which relied on CCTV cameras and sensors processed by police forces, thanks to the smart policing platforms IBM was selling them,” she said.

“This is why it is very cynical for IBM to now turn around and say they want a national dialogue about the use of technology in policing.”

She added: “IBM are trying to redeem themselves because they have been instrumental in increasing the technical capabilities of the police through the development of so-called smart policing techniques. But let’s not be fooled by their latest move.

“First of all, their announcement was ambiguous. They talk about ending ‘general purpose’ facial recognition, which makes me think it may not be the end of facial recognition for IBM; it will just be customised in the future.”

The Algorithmic Justice League was one of the first activist groups to indicate that there were racial biases in facial recognition data sets.

A 2019 study conducted by the Massachusetts Institute of Technology found that none of the facial recognition tools from Microsoft, Amazon and IBM were 100% accurate when it came to recognising men and women with dark skin.

And a study from the US National Institute of Standards and Technology suggested facial recognition algorithms were far less accurate at identifying African-American and Asian faces compared with Caucasian ones.

Amazon, whose Rekognition software is used by police departments in the US, is one of the biggest players in the field, but there are also a host of smaller players such as Facewatch, which operates in the UK. Clearview AI, which has been told to stop using images from Facebook, Twitter and YouTube, also sells its software to US police forces.

Maria Axente, AI ethics expert at consultancy firm PwC, said facial recognition had demonstrated “significant ethical risks, mainly in enhancing existing bias and discrimination”.

She added: “In order to build trust and solve critical issues in society, purpose as much as profit should be a key measure of performance.”